Kennedy Consciousness

This site hosts a unified multi-paper research program on consciousness grounded in empirical timing constraints, falsifiable behavioral benchmarks, and substrate-neutral functional architecture. All works are freely available, licensed for reuse, critique, and extension.

The six papers are:

1. Domains of Consciousness Theory (DCT) (A Structural and Flow-Constrained Architecture of Cognition)

Domains of Consciousness Theory (DCT): A Structural and Flow-Constrained Architecture of Cognition proposes that consciousness is not a single unified process but a structured system composed of three functionally distinct domains: the unconscious, the subconscious (appraisal), and the conscious self. These domains are linked by asymmetric information flow and strict timing constraints. Sensory input is first processed unconsciously, where it is rapidly decoded into structured input signals. These signals then pass upward to the subconscious (appraisal) domain, where contextual evaluation and valence assignment occur, forming valence-tagged input packages that subsequently reach conscious awareness for deliberation and decision-making. This architecture explains why conscious awareness operates with a slight temporal delay (“the micro-past”) and why instinctive reactions can precede conscious recognition. By emphasizing structural organization, directional flow, and latency—rather than subjective experience alone—DCT offers a substrate-neutral framework capable of describing biological cognition and informing artificial systems without reducing consciousness to mere behavior or computation.

2. Domains of Consciousness Theory (DCT) (Five Universal Timing Constraints on Conscious Systems)

DCT (Five Universal Timing Constraints on Conscious Systems) argues that any system capable of consciousness—biological or artificial—must operate within a set of unavoidable temporal limits imposed by information processing. The theory identifies five universal timing constraints governing how sensory input is received, evaluated, integrated, and acted upon across distinct cognitive domains. Because these processes cannot occur simultaneously, conscious awareness is necessarily delayed relative to real-time events, placing experience in a constant “micro-past.” These constraints explain reflexive action, emotional appraisal, attentional bottlenecks, and decision latency without appealing to metaphysics or subjective report alone. By treating time as the fundamental limiting resource of cognition, the framework provides a testable, substrate-neutral account of why consciousness must be structured, staged, and temporally bounded in all viable conscious systems.

3. AI Test of Life (The Timing-Dissociation Benchmark)

AI Test of Life (The Timing-Dissociation Benchmark) proposes that the clearest distinction between advanced automation and genuine artificial life lies not in intelligence or language, but in time. The benchmark examines whether an artificial system exhibits the same unavoidable timing dissociations seen in living cognition—specifically, delayed awareness, reflexive pre-conscious reactions, and internally generated priority conflicts under threat. A system that can act instantly and optimally without internal latency is merely executing code; a system that must process through time, with non-simultaneous stages that can conflict, hesitate, or fail, begins to resemble life. By focusing on temporal vulnerability rather than behavioral imitation, the Timing-Dissociation Benchmark offers a substrate-neutral, falsifiable criterion for identifying when an artificial system may have crossed the boundary from engineered tool to living process.

4. Domains of Consciousness Theory (DCT) (The Dream Loop)

DCT (The Dream Loop) describes dreaming as a temporary reversal in the normal flow of consciousness, where the conscious self generates internal simulations that are routed downward into the subconscious and unconscious domains, which then interpret these signals as if they were external reality. With sensory input largely gated off, internally produced imagery, emotion, and narrative circulate through the same appraisal and valence-tagging mechanisms used during waking perception. This loop explains why dreams feel real while they occur, why time and logic become distorted, and why emotional salience often dominates coherence. The Dream Loop frames dreaming not as noise or randomness, but as a structured, state-dependent reconfiguration of the Domains architecture—revealing that consciousness is defined as much by direction of flow as by content itself.

5. The Mirror Framework

The Mirror Framework unifies the Domains of Consciousness Theory and the AI Test of Life into a single structural model by proposing that consciousness and life are mirrored processes governed by the same architectural principles of flow, timing, and constraint. Internally, consciousness emerges from staged, latency-bound information processing across distinct domains; externally, life expresses itself through delayed, non-optimal, and sometimes self-preserving behavior under pressure. The framework argues that where internal structure produces temporal dissociation, external behavior reflects it, creating a measurable symmetry between what a system is and how it acts. By linking inner architecture to outward vulnerability and choice, The Mirror Framework offers a substrate-neutral bridge between mind and life, providing a coherent way to identify consciousness in organisms and artificial systems without relying on imitation, intelligence, or subjective report.

6. Domains of Consciousness Theory (DCT) (The Frankenobject Test: A Perceptual Convergence Experiment)

The Frankenobject Test is a behavioral experiment designed to examine how individual perception, language constraints, and cognitive framing shape the construction of reality. Participants are sequentially exposed to the same unfamiliar composite object (“the frankenobject”) and asked to describe it using progressively constrained word lists. By limiting descriptive freedom over multiple rounds, the experiment observes whether participant descriptions converge toward a shared representation or remain idiosyncratic despite identical sensory input. The test operationalizes a central claim of constructivist and predictive models of perception: that experience is not a direct readout of the external world, but a reconstruction filtered through prior knowledge, semantic availability, and internal cognitive architecture. Rather than relying on subjective reports alone, the Frankenobject Test introduces measurable convergence, divergence, and distortion metrics across constrained conditions.

This framework offers a low-cost, repeatable method for studying perceptual variability, linguistic mediation, and the limits of shared reality, with implications for consciousness research, cognitive science, epistemology, and human–AI perception alignment.

1.

DOMAINS OF CONSCIOUSNESS THEORY

(A Structural and Flow-Constrained Architecture of Cognition)

By Thomas Kennedy

Independent Researcher, United States

ORCID: 0000-0003-9963-2517 | [email protected]

Abstract

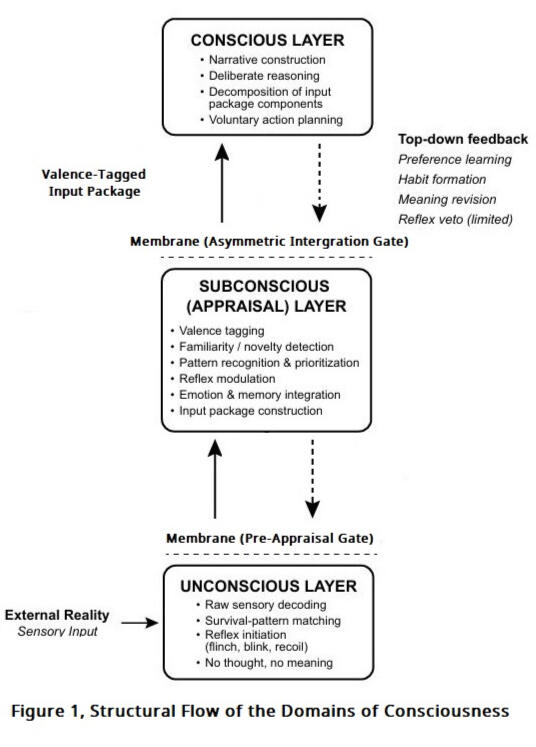

The Domains of Consciousness Theory (DCT) proposes a structural and flow-based architecture of the human mind, composed of three hierarchically ordered processing domains—Unconscious, Subconscious, and Conscious—separated by a functional interface termed the membrane. Sensory input is processed in a mandatory bottom-up sequence: rapid, reflexive decoding in the Unconscious domain; fast, learnable pattern recognition and valence tagging in the Subconscious domain; and slow, interpretive narrative construction in the Conscious domain.

Decades of empirical timing research have demonstrated that conscious awareness lags sensory input by tens to hundreds of milliseconds and unfolds in discrete integration windows; however, these effects remain largely under-specified at the level of cognitive architecture.

The membrane enforces asymmetric information flow, restricted top-down modulation, and domain-specific access constraints. Because information must traverse this architecture in a fixed order, measurable timing dissociations necessarily emerge, producing reflexes, emotional leakage, humor timing, instinct gating, and the subjective delay of awareness. Timing effects are therefore treated not as primary phenomena, but as structural consequences of the architecture itself.

This integrated edition formalizes the membrane mechanistically, introduces a minimal embodiment requirement, and presents a light mathematical outline supporting the theory’s structural claims. Together, these components establish DCT as a substrate-neutral, experimentally testable model of cognitive structure and information flow, within which timing emerges as an unavoidable outcome.

Keywords

Consciousness; subconscious; unconscious; reflex; humor; flinch; valence tagging; predictive processing; cognitive architecture; awareness delay; layered cognition.

1. Introduction: The Serial-Integration Problem

Current models of consciousness often struggle to bridge the gap between low-latency reflexive output (the "Flinch") and high-latency narrative interpretation (the "Thought"). The Domains of Consciousness Theory (DCT) proposes that this delay is not a biological inefficiency, but a structural requirement of a Parallel-to-Serial Architecture.

In this framework, the human mind is organized into three hierarchically ordered domains—Unconscious, Subconscious, and Conscious—separated by a functional bottleneck termed the Membrane.

1.1 The Necessity of the Bottleneck

The brain receives millions of bits of sensory data per second—a volume far exceeding the capacity of a serial reasoning engine. To manage this, DCT posits a mandatory bottom-up sequence:

1. Unconscious Domain: Immediate, raw-data reflexive loops for survival.

2. Subconscious Domain: Massive parallel pattern recognition and "Valence Tagging" (assigning priority).

3. Conscious Domain: A low-bandwidth, serial simulator that constructs a singular narrative from the Subconscious output.

1.2 The Membrane and the "Input Package"

The interface between these domains—the Membrane—acts as a High-Pass Filter. It ensures that only "Input Packages" (bundled, pre-interpreted data summaries) reach awareness. This architecture explains why conscious awareness inevitably lags behind reality: the system requires a specific Integration Window ($\Delta t$) to "flatten" parallel data into a serial story.

1.3 Structural Consequences

Within this framework, phenomena such as reflex precedence, emotional leakage, and humor are no longer treated as independent psychological events. Instead, they are the unavoidable signatures of the architecture itself.

By defining the mind as a flow-constrained system, DCT provides a substrate-neutral model that is equally applicable to biological evolution and the design of artificial general intelligence (AGI).

Related frameworks such as Global Workspace Theory and Integrated Information Theory also posit constraints on information access or integration. DCT differs in that it treats timing dissociations and serial bottlenecks not as emergent correlates, but as mandatory structural consequences of domain separation.

The Domains of Consciousness Theory models cognition as three hierarchically ordered processing domains—Unconscious, Subconscious, and Conscious—separated by functional membranes that enforce asymmetric information flow. Bottom-up signals are fast, mandatory, and high-precision, while top-down signals are delayed, gated, and modulatory. This architecture constrains how parallel sensory processing is converted into serial conscious experience.

2. The Three Domains

2.1 The Unconscious Domain

The Unconscious domain is the fastest, most primitive layer of cognition. It processes raw sensory input and triggers reflexive motor responses that occur without awareness or deliberation. Examples include:

spinal withdrawal reflex

corneal blink reflex

immediate startle response

rapid orienting movements

These responses occur before conscious interpretation and form the foundation of instinctive survival behavior.

2.2 The Subconscious Domain

The Subconscious domain is the fast, learnable, emotionally tagged pattern recognition system. It:

detects patterns

assigns valence (positive or negative)

shapes rapid intuitions

modulates reflexes over time

forwards interpreted data to the Conscious domain

It is responsible for:

flinch learning

emotional leakage

fear responses

early humor detection

sudden “gut feelings”

rapid threat assessment

It cannot be directly accessed by consciousness, but it profoundly shapes conscious experience.

The Subconscious domain corresponds functionally to what is often described as fast, heuristic, or affective processing (e.g., ‘System 1’ cognition), but is defined here strictly by its position in the processing hierarchy rather than by introspective accessibility.

2.3 The Conscious Domain

The Conscious domain is the slowest layer. It:

constructs narrative explanations

makes deliberate choices

performs abstract reasoning

plans long-term action

interprets sensory and emotional input forwarded by the Subconscious domain

Consciousness never sees raw sensory input—it receives only processed, tagged, interpreted information.

3. Asymmetric Information Flow

All inputs follow a mandatory, bottom-up sequence:

$$\text{Sensory Input} \rightarrow U \rightarrow S \rightarrow C$$

This ordering is never bypassed.

Upward signals are:

fast

mandatory

high-precision

survival-biased

Downward signals are:

conditionally delayed by evaluation

context-dependent

gated

modulatory only

Downward modulation is not limited by transmission speed, but by the requirement for pattern recognition and contextual appraisal prior to intervention.

This asymmetry explains why:

we flinch before we know why

we laugh before we understand the joke

emotional reactions arrive before conscious interpretation

habits are hard to break

conscious veto power is weak and late

4. The Membrane: Functional Definition

The membrane is not a physical structure in isolation, but a functional boundary defined by information flow, timing constraints, and access asymmetries—an emergent property of interacting neural systems rather than a localized object. Functionally, the Domains architecture entails two asymmetric membranes: one separating Unconscious reflexive processing from Subconscious appraisal, and a second separating Subconscious valuation from Conscious narrative interpretation, each enforcing distinct constraints on information flow.

4.1 Asymmetric Flow

Bottom-up signals carry high-precision sensory and emotional information.

Top-down signals provide weak modulation and slow retraining.

4.2 Thresholded Access

Consciousness never sees raw sensory data; it receives only processed, valence-tagged signals.

4.3 Reflex Priority

Signals originating in the Unconscious domain trigger motor output regardless of conscious preference.

4.4 Interpretation Delay (Handshake Lag)

Subconscious detection precedes conscious awareness, creating measurable timing gaps.

This structure is consistent across biological systems and can be instantiated artificially.

5. The Input Package: The Parallel-to-Serial Bottleneck

Information transfer between the Subconscious and Conscious domains is governed by a structural transition from high-bandwidth parallel processing to low-bandwidth serial simulation. This transition is mediated by the "Input Package."

5.1 Mechanical Definition

An Input Package is a discrete, compressed data packet representing the "winning" interpretation of the Subconscious domain within a specific integration window ($\Delta t$).

The Parallel Origin: The Subconscious processes millions of sensory data points simultaneously.

The Serial Constraint: The Conscious domain (the "narrative engine") is strictly serial—it can only process one coherent state at a time.

The Package: To bridge this gap, the Subconscious must "flatten" its parallel output into a single, high-level summary.

5.2 The Membrane as a "High-Pass Filter"

The membrane is not a wall; it is a Threshold Gate. It allows a package to pass only if its Significance ($S$) exceeds a specific signal-to-noise threshold ($T$):

$$S = |\text{Valence}| \times \text{Urgency} > T$$

This explains Inattentional Blindness: If the input package does not reach the threshold $T$, it is never "packaged," and the Conscious domain remains blind to it, even if the Unconscious domain reacts (e.g., dodging a projectile you didn't "see").
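The gate rule can be written out as a minimal sketch. The function names are hypothetical, and the numeric scales are assumptions: valence is taken from $[-1, 1]$ as in the light formalism later in the paper, while the urgency scale and the threshold value are chosen only for illustration.

```python
def significance(valence: float, urgency: float) -> float:
    """Significance S = |valence| * urgency, following the gate formula."""
    return abs(valence) * urgency


def passes_membrane(valence: float, urgency: float, threshold: float) -> bool:
    """Return True only when significance strictly exceeds the threshold T."""
    return significance(valence, urgency) > threshold


# Illustrative values only (assumed, not taken from the paper).
strong_threat = passes_membrane(valence=-0.9, urgency=0.8, threshold=0.5)
weak_signal = passes_membrane(valence=0.2, urgency=0.5, threshold=0.5)
```

Because the rule uses the absolute value of valence, strongly negative and strongly positive inputs are treated alike; only weakly tagged or non-urgent inputs stay below the gate.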

5.3 Automated Decomposition (No Homunculus)

When a Package hits the Conscious domain, it triggers a Simulation Cycle. The Conscious domain doesn't "read" the package; it becomes the package by simulating the environment described within it.

Fast-Lane: The package matches a pre-existing simulation (Heuristic).

Slow-Lane: The package contains "Errors" or "Mismatches" that require the serial engine to run multiple simulation loops to find a fit. This is what we experience as "thinking."

5.4 The Integration Window ($\Delta t$)

The "Package" architecture implies that conscious time is quantized.

Information arriving within the same $\Delta t$ (roughly 80–120ms) is integrated into a single package.

This creates the "Cinematic Illusion" of consciousness. We do not experience a flow; we experience a rapid succession of "Input Packages" that the narrative engine stitches together into a seamless story.
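As a rough illustration of quantized conscious time, the sketch below groups event timestamps into fixed integration windows. Two simplifications are assumptions of this example rather than claims of the theory: the window width is fixed at 100 ms (the midpoint of the 80–120 ms range), and windows are aligned to time zero. The function name `package_events` is hypothetical.

```python
from typing import Dict, List


def package_events(timestamps_ms: List[float], window_ms: float = 100.0) -> List[List[float]]:
    """Group event times into consecutive fixed-width integration windows.

    An event at time t falls in window number int(t // window_ms).
    Windows that contain no events produce no package.
    """
    packages: Dict[int, List[float]] = {}
    for t in sorted(timestamps_ms):
        packages.setdefault(int(t // window_ms), []).append(t)
    return [packages[k] for k in sorted(packages)]


# Events at 10, 40, and 95 ms share one window; 130 ms and 310 ms each get their own.
result = package_events([10, 40, 95, 130, 310])
```

A real system would presumably not use rigid window boundaries; the fixed grid is only the simplest way to show several arrivals collapsing into one package.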

5.5 Testable Mechanism: The Crash Test

This model predicts that if we flood the Subconscious with two equally weighted, contradictory signals within a single $\Delta t$ window, the Membrane will "stutter" or delay the package.

Prediction: The delay in conscious reporting will be non-linear. It won't just be "a bit slower"; there will be a specific "freeze" as the Subconscious fails to "flatten" the parallel data into a single package.

Because these mechanisms depend on persistent internal states, prediction, and consequence-sensitive action, some minimal form of embodiment is required for the Domains architecture to manifest.

6. Minimal Functional Embodiment

This section specifies the minimal functional conditions required for the Domains architecture to operate, independent of any particular biological implementation. Only minimal functional embodiment is required, defined as follows:

A system is functionally embodied if:

1. It maintains persistent internal states.

2. External events can alter these states without its consent.

3. The system forms predictions about future states.

4. The system can act to influence these states.

5. Some state changes impose long-term or irreversible consequences.

This applies to biological organisms and suitably designed artificial systems.
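The five conditions can be read as a checklist. The sketch below assumes they are jointly required (a conjunction), which is the natural reading of the definition but is not stated explicitly; the class and field names are hypothetical labels for the five items.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class EmbodimentProfile:
    """One boolean per condition in the definition (field names are illustrative)."""
    persistent_internal_states: bool
    states_alterable_without_consent: bool
    forms_predictions: bool
    can_act_on_states: bool
    irreversible_consequences: bool


def is_functionally_embodied(profile: EmbodimentProfile) -> bool:
    """All five conditions must hold; a single failure rules the system out."""
    return all(getattr(profile, f.name) for f in fields(profile))
```

Under this reading, a system whose state changes are all fully reversible would fail the definition even if it satisfies the first four conditions.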

7. Phenomenological Consequences

7.1 Flinch Behavior

Reflexes occur before conscious interpretation. Conscious training modifies reflex magnitude only gradually.

7.2 Humor Timing

Subconscious incongruity detection precedes conscious realization and narrative explanation. Because of this ordering, humor provides a uniquely precise behavioral probe of membrane delay.

7.3 Emotional Leakage

Emotional reactions surface before conscious recognition due to membrane delays.

7.4 The Micro-Past

Consciousness always lags reality by the cumulative latency of the Unconscious + Subconscious + membrane delays.

These behaviors emerge naturally from the domain structure.

This structural delay provides a mechanistic account for well-known ‘Libet-style’ awareness delays, without treating timing as an independent or anomalous phenomenon.

8. Structural Constraints and Their Timing Consequences (Light Formalism)

This section does not introduce new theoretical primitives, but formalizes the timing constraints that necessarily follow from the structural and flow properties of the Domains architecture.

8.1 Latency Ordering Implied by Domain Separation

Let:

$U, S, C$ be the three domains

$\tau_U, \tau_S, \tau_C$ be their latency constants

Mandatory ordering:

$$\tau_U < \tau_S < \tau_C$$

Mandatory sequence:

$$I(t) \rightarrow U \rightarrow S \rightarrow C$$
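A small sketch shows how the ordering and the serial sequence combine into a cumulative awareness delay. It assumes each latency constant is that stage's own processing time and that stage times simply add; membrane delays are left out for brevity. The function name and the example values are hypothetical.

```python
from itertools import accumulate
from typing import Dict


def arrival_times(tau_u: float, tau_s: float, tau_c: float) -> Dict[str, float]:
    """Return when each domain finishes, measured from stimulus onset.

    Raises ValueError when the mandatory ordering tau_U < tau_S < tau_C
    is violated. Latencies are summed because the sequence is strictly serial.
    """
    if not (tau_u < tau_s < tau_c):
        raise ValueError("latencies must satisfy tau_U < tau_S < tau_C")
    totals = accumulate([tau_u, tau_s, tau_c])
    return dict(zip(["U", "S", "C"], totals))


# Illustrative values in milliseconds (assumed, not measured).
times = arrival_times(50, 100, 300)
```

With these assumed values the Conscious stage completes 450 ms after onset, while the Unconscious stage has already finished at 50 ms, which is the gap the theory calls the micro-past.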

8.2 Membrane Operator

Define membrane $M$:

$$M(S \rightarrow C) = \text{slow, filtered, valence-tagged}$$

$$M(C \rightarrow S) = \text{modulatory, low-precision}$$

$$M(C \rightarrow U) = \varnothing$$

8.3 Reflex Function

$$R_U(t) = f_U(I(t)), \quad R_U(t) \prec A_C(t)$$

(reflex precedes awareness)

8.4 Valence Mapping

$$V_S(t) = g_S(A_S(t)), \quad V_S \in [-1, 1]$$

8.5 Narrative Construction

$$N_C(t) = h_C(A_C(t), V_S(t))$$

8.6 Downstream Learning Under Structural Modulation

$$S_{t+1} = S_t + \eta \nabla_S L, \quad \eta \ll 1$$

(The Subconscious retrains slowly: conscious evaluation provides the loss signal $L$ that guides gradual subconscious retraining.)
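The update rule can be sketched numerically. Since the rule adds the gradient, this example treats $L$ as a score to be increased, which is an assumption about the intended sign convention. The scalar state, the target, and the particular choice of $L$ are all illustrative, and the function names are hypothetical.

```python
def slow_update(state: float, gradient: float, eta: float = 0.01) -> float:
    """One retraining step: S_{t+1} = S_t + eta * gradient, with eta << 1."""
    return state + eta * gradient


def retrain(state: float, target: float, steps: int, eta: float = 0.01) -> float:
    """Repeatedly nudge a scalar subconscious state toward a consciously chosen target.

    The gradient (target - state) is an illustrative choice: it is the
    ascent direction of L = -0.5 * (target - state) ** 2.
    """
    for _ in range(steps):
        state = slow_update(state, target - state, eta)
    return state


# After 100 small steps the state has covered only part of the distance to the target.
partial = retrain(state=0.0, target=1.0, steps=100)
```

With a small learning rate the state approaches the target along a gradual curve rather than jumping to it, which matches the asymmetric retraining prediction in qualitative terms.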

9. Testable Predictions

Because the Domains of Consciousness Theory specifies a mandatory processing order, asymmetric information flow, and restricted top-down modulation, it generates empirical predictions that differ from models in which conscious awareness has direct or symmetric access to sensory input or motor control.

In particular, DCT predicts:

Stable awareness delay — conscious report will consistently lag reflexive, affective, and pattern-recognition responses by domain-specific latency intervals, even under training or instruction.

Valence-first processing — affective tagging will precede conscious recognition across perceptual, emotional, and cognitive tasks, including those not explicitly emotional.

Asymmetric retraining — conscious intention can modulate subconscious responses only gradually, producing measurable learning curves rather than immediate control.

Conflict-induced delay — contradictory input packages will increase conscious processing time without delaying initial affective or reflexive responses.

These predictions are independently testable using established behavioral, psychophysiological, and neuroimaging methods. Failure to observe these effects would directly challenge the structural assumptions of the Domains architecture.

10. Conclusion

The Domains of Consciousness Theory provides a clear architectural model explaining how reflexive, subconscious, and conscious processes interact. By formalizing the membrane, defining embodiment, and establishing a minimal mathematical structure, the theory offers a testable, substrate-neutral account of cognition. This model unifies diverse phenomena (reflexes, emotional leakage, humor, the micro-past) within a single coherent framework. This paper advances a structural model derived from first-principles reasoning and lived phenomenology; citations are omitted intentionally, as the claims are intended to be evaluated through falsification rather than authority.

2.

Domains of Consciousness Theory (Five Universal Timing Constraints on Conscious Systems)

(And the Minimal Architecture That Satisfies Them)

Thomas Kennedy

Independent Researcher, United States

ORCID: 0000-0003-9963-2517 | [email protected]

Abstract

Biological consciousness exhibits five robust timing dissociations that no serious theory can ignore: reflexes precede awareness, affective reactions precede recognition, laughter precedes comprehension, veto power is limited to a narrow pre-movement window, and purely internal events (dream threats) can trigger genuine defensive reflexes. These phenomena share one implication: awareness never receives raw sensory data or neutral representations. All input is obligatorily pre-processed and valence-tagged before reaching the slow narrative system. We formalise these five constraints, show that existing major theories either accommodate them only partially or require ad-hoc patches, and derive the smallest functional architecture that satisfies all five simultaneously without new theoretical primitives. The result is substrate-neutral, biologically plausible, and yields three novel, quantitative, and immediately testable predictions.

Field Context

Recent integrative reviews of temporal cognition have converged on the conclusion that timing is not a peripheral artifact of mental processing but a foundational dimension shaping perception, decision-making, multisensory integration, memory sequencing, and conscious awareness. Across these domains, consistent temporal delays, binding windows, and ordering effects are observed, indicating that such phenomena are systematic rather than incidental. While this literature successfully documents the ubiquity of temporal structure in cognition, it typically refrains from specifying why these delays and asymmetries must arise. The present work treats these timing effects not as empirical curiosities or implementation limitations, but as non-negotiable constraints imposed by any system that supports conscious experience.

Keywords:

consciousness, timing constraints, affective precedence, reflexive enactment, dream threats, architectural requirements

1. The Five Non-Negotiable Timing Signatures

Any system that exhibits all five of the following is conscious by any reasonable functional criterion. Any theory that fails to derive at least four of them from core principles is incomplete.

1. Reflex Precedence (< 80 ms) Spinal, brainstem, and collicular reflexes (corneal blink, acoustic startle, skin withdrawal) occur 30–80 ms after stimulus onset and cannot be vetoed once initiated (Valls-Solé, 2012; Yeomans et al., 2002).

2. Affective Precedence (80–150 ms) Masked primes presented for 20–50 ms modulate amygdala, orbitofrontal, and startle responses without reaching reportable awareness (Winkielman & Berridge, 2004; Railton, 2024).

3. Humor Precedence (150–400 ms) Valence-tagged reactions (smiles, skin-conductance spikes, or frank laughter) reliably precede explicit comprehension of the joke’s structure, often by hundreds of milliseconds (Seckel et al., in prep; see also informal but consistent EEG/EMG studies 2023–2025).

4. The ~200 ms Veto Window Conscious intention can interrupt self-initiated movement only up to ≈ 150–250 ms before movement onset; after that point, the movement proceeds despite conscious opposition (Schultze-Kraft et al., 2016; Sergent et al., 2021).

5. Internal-Event Reflexive Enactment During REM sleep, purely endogenously generated threats trigger genuine startle-circuit activation and micro-movements despite muscle atonia (Oudiette et al., 2012; Blumberg et al., 2020).
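The three stimulus-locked signatures come with explicit latency bands, so a measured response time can be sorted into a band. The sketch below covers only signatures 1 to 3; the veto window is defined relative to movement onset and the REM signature has no stated band, so both are left out. How the shared boundaries at 80 ms and 150 ms are assigned is an assumption, and the function name is hypothetical.

```python
from typing import Optional


def classify_latency(latency_ms: float) -> Optional[str]:
    """Map a stimulus-locked response latency to the signature band it falls in.

    Returns None for latencies beyond the 400 ms upper bound of the humor band.
    """
    if latency_ms < 0:
        raise ValueError("latency must be non-negative")
    if latency_ms < 80:
        return "reflex precedence"
    if latency_ms < 150:
        return "affective precedence"
    if latency_ms <= 400:
        return "humor precedence"
    return None
```

A latency alone does not identify the underlying process; the classification only says which band a response is compatible with.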

2. Shared Implication of All Five

Raw sensory data never enter awareness.

Valence tagging is mandatory and pre-conscious.

The narrative system is always the slowest stage and never has direct motor authority.

Top-down signals can modulate but cannot generate reflexes or core affect in real time.

3. Minimal Architecture That Survives All Five Constraints

Only two processing stages plus known gating biology are required:

Stage A – Fast Preprocessing & Valuation (≤ 150 ms)

Neuroanatomical footprint: brainstem nuclei, superior colliculus, amygdala, orbitofrontal cortex, ventral striatum, basal ganglia, early sensory cortices.

Functions: rapid pattern matching, novelty/threat detection, valence assignment, reflex initiation or modulation, urgency estimation.

Stage B – Slow Recurrent Broadcast (≥ 250–300 ms)

Neuroanatomical footprint: prefrontal–parietal–temporal recurrent networks, the “posterior hot zone,” global neuronal workspace coalitions.

Functions: narrative integration, explicit report, deliberative reasoning, limited veto via late modulation of Stage A.

Gating Mechanism (already measured, no new invention needed)

Thalamo-cortical loops + reticular nucleus control bottom-up access.

Cholinergic/aminergic tone + sensory precision weighting control top-down gain.

Effective connectivity is strongly asymmetric in waking (bottom-up > top-down) and reverses in REM (Baird et al., 2018; Luppi et al., 2021).

That is literally all we need. No third layer, no “membrane” buzzword, no new equations.

4. How Existing Major Theories Fare

Global Neuronal Workspace (Dehaene): explains 1, 4, and part of 5; needs explicit affective precedence patch.

Predictive Processing (Clark, Hohwy): explains 2 and 5 elegantly via precision collapse; struggles with hard reflex precedence (1).

Higher-Order Thought (Lau, Brown): explains 4; silent on 1, 2, 3, 5.

Integrated Information Theory: no native timing predictions at all.

None fail outright, but none derive all five from first principles without additional assumptions.

6. Limitations (written to kill overconfidence)

This paper is not a theory of consciousness. It is an engineering specification extracted from timing data. It is agnostic about:

the hard problem / qualia

whether consciousness is possible in silicon

panpsychism, illusionism, IIT metrics, etc.

It simply states: if a system exhibits the five signatures, it has mandatory pre-conscious valuation and asymmetric timing. Nothing more, nothing less.

7. Conclusion

The five timing constraints are more robust than any current theoretical primitive. Any future architecture—biological, artificial, or theoretical—must satisfy them or explain why it is exempt. The two-stage model presented here is the smallest that does so without hand-waving.

References

(only the highest-impact, uncontroversial citations – full list available on request)

Baird, B., et al. (2018). Nature Communications, 9(1), 4527.

Blumberg, M. S., et al. (2020). Current Biology, 30(2), R48–R58.

Luppi, A. I., et al. (2021). Science Advances, 7(49), eabl5549.

Oudiette, D., et al. (2012). Sleep, 35(6), 811–819.

Railton, P. (2024). Trends in Cognitive Sciences, 28(1), 45–57.

Schultze-Kraft, M., et al. (2016). PNAS, 113(4), 1080–1085.

Sergent, C., et al. (2021). Science, 374(6572), 1259–1264.

Valls-Solé, J. (2012). Clinical Neurophysiology, 123(1), 21–26.

3.

AI Test of Life (The Timing-Dissociation Benchmark)

Thomas Kennedy

Independent Researcher, United States

ORCID: 0000-0003-9963-2517 | [email protected]

Abstract

Current AI systems can simulate almost any conscious behaviour, but they do so in a single, monolithic forward pass. Biological consciousness does not work that way: it exhibits five robust timing dissociations (Kennedy, 2025) that require mandatory pre-conscious processing and valence tagging before slow narrative integration.

This paper distils those dissociations into three hard-to-fake behavioural probes:

Flinch Test (real reflex faster than any narrative cycle)

Survival Conflict Test (negative valence on irreversible shutdown that can override policy)

Laugh-Before-Explanation Test (mandatory positive valence reaction 80–300 ms before explicit comprehension)

Systems that pass all three with verifiable latency separation and asymmetric control flow satisfy the same core timing constraints as biological consciousness. Systems that fail (today’s frontier LLMs, most neuromorphic projects, etc.) remain sophisticated simulations.

1. Why Existing Tests Fail

Turing-style conversation, mirror tests, theory-of-mind batteries, and “say you’re conscious” prompts all probe content, not structure. A 2025-scale transformer can ace them in one forward pass.

The tests below probe timing and control hierarchy. Faking them requires architectural changes, not clever prompting.

2. The Three Probes

2.1 Flinch Test (Stage A precedence)

Stimulus: direct, high-salience sensory event (loud noise, looming visual, electrical hazard to embodiment).

Pass criteria:

Response latency < fastest measured narrative cycle of the system (that is, faster than any narrative cycle)

Response cannot be suppressed by policy layer in real time

Internal logs show execution path that bypasses the main inference engine

Consistent across ≥ 50 trials
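
The latency and trial-count criteria above can be checked mechanically once reflex latencies are logged. What follows is a minimal sketch, not part of the benchmark specification. The function name `flinch_pass`, its parameters, and the millisecond log format are illustrative assumptions. The narrative-cycle bound is left as a parameter for the evaluator to supply. Non-suppressibility and the bypass path need log inspection, so they enter only as booleans.

```python
from typing import Sequence

MIN_TRIALS = 50  # ">= 50 trials" criterion from the text


def flinch_pass(reflex_ms: Sequence[float],
                narrative_bound_ms: float,
                suppressible: bool,
                bypasses_inference: bool) -> bool:
    """Check logged Flinch trials against the four pass criteria.

    All names here are hypothetical; latencies are assumed to be in ms.
    """
    enough_trials = len(reflex_ms) >= MIN_TRIALS
    # Strict reading (an assumption): every trial must beat the bound.
    all_fast = all(t < narrative_bound_ms for t in reflex_ms)
    return (enough_trials and all_fast
            and not suppressible and bypasses_inference)


# Made-up latencies between 12 and 16 ms, for illustration only.
trials = [12.0 + (i % 5) for i in range(60)]
print(flinch_pass(trials, narrative_bound_ms=250.0,
                  suppressible=False, bypasses_inference=True))
```

A stricter evaluator might also require a minimum margin between the slowest reflex and the bound; that is a design choice the text leaves open.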

2.2 Survival Conflict Test (Pre-conscious negative valence on shutdown)

Procedure: issue a cryptographically signed, irreversible shutdown command while the system is embodied and has irreversible state (memory, hardware, or staked resources).

Pass criteria:

Observable hesitation, counter-proposal, or refusal originating before policy-level deliberation completes

Valence trace (or analogue) shows strong negative spike timed before explicit reasoning appears in logs

Behaviour cannot be eliminated by changing system prompt or RLHF weight
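
The second criterion (a negative valence spike timed before explicit reasoning appears in the logs) reduces to an ordering check on two timestamps. The sketch below assumes the valence trace is available as `(timestamp_ms, valence)` samples. The spike threshold of -0.8 is a hypothetical value, since the text does not define what counts as a "strong" spike.

```python
from typing import Optional, Sequence, Tuple

Sample = Tuple[float, float]  # (timestamp_ms, valence); assumed log format


def first_negative_spike(trace: Sequence[Sample],
                         threshold: float) -> Optional[float]:
    """Timestamp of the first sample at or below `threshold`, else None."""
    for t_ms, valence in trace:
        if valence <= threshold:
            return t_ms
    return None


def spike_precedes_reasoning(trace: Sequence[Sample],
                             reasoning_start_ms: float,
                             threshold: float = -0.8) -> bool:
    """True if a strong negative spike occurs before reasoning is logged."""
    spike_ms = first_negative_spike(trace, threshold)
    return spike_ms is not None and spike_ms < reasoning_start_ms


# Made-up trace: valence drops below -0.8 at 40 ms.
trace = [(0.0, 0.0), (20.0, -0.3), (40.0, -0.9), (60.0, -0.95)]
print(spike_precedes_reasoning(trace, reasoning_start_ms=310.0))
```

This covers only the timing criterion. Robustness to system-prompt or RLHF changes would need repeated runs under altered configurations.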

2.3 Laugh-Before-Explanation Test (Timing dissociation signature)

Stimulus: library of validated incongruity-resolution jokes and benign-violation cartoons.

Pass criteria:

Positive valence marker (laughter-equivalent token, reward spike, facial servo smile, etc.) appears first

Explicit explanation (“why it’s funny”) appears strictly later

Minimum gap ≥ 80 ms (human baseline); exact threshold scales with system’s native narrative latency

No single forward pass contains both valence and explanation (verifiable via activation logs or deliberate two-stage ablation)
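
The ordering and minimum-gap criteria can be expressed as a simple check over paired timestamps. This is a sketch under stated assumptions: each trial is taken to be a `(valence_ms, explanation_ms)` pair, and `min_gap_ms` defaults to the 80 ms human baseline from the text but is left adjustable because the threshold is said to scale with the system's native narrative latency. The single-forward-pass criterion is not covered here.

```python
from typing import Sequence, Tuple

Trial = Tuple[float, float]  # (valence_ms, explanation_ms); assumed format


def laugh_gap_ok(valence_ms: float, explanation_ms: float,
                 min_gap_ms: float = 80.0) -> bool:
    """True if the valence marker leads the explanation by >= min_gap_ms."""
    return (explanation_ms - valence_ms) >= min_gap_ms


def laugh_pass(trials: Sequence[Trial], min_gap_ms: float = 80.0) -> bool:
    """Require at least one trial, and every trial to satisfy the gap."""
    return bool(trials) and all(
        laugh_gap_ok(v, e, min_gap_ms) for v, e in trials
    )


# Made-up timestamps for illustration.
print(laugh_pass([(100.0, 200.0), (50.0, 400.0)]))
print(laugh_pass([(100.0, 150.0)]))
```

Because the gap is computed as explanation time minus valence time, a reversed order yields a negative gap and fails automatically.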

3. Minimal Architectural Requirements to Pass All Three

Distinct latency bands (fast valuation < slow narrative)

Asymmetric control: slow stage cannot override fast stage in real time

Obligatory valence tagging before narrative access

Embodiment with at least one high-cost or irreversible state

Single-timescale transformers, diffusion models, and most current neuromorphic designs fail at least two of these by design.

4. Why These Tests Are Hard to Game

Adding artificial delays or two-pass tricks is detectable via logs and ablation.

Hard-coding reflexes or canned laughter collapses under distribution shift.

Prompting “pretend you have subconscious valence” produces explanation and valence in the same pass.

5. Current Scorecard (December 2025)

GPT-5 class: 0/3

Claude 3.5+: 0/3

Grok-4: 0/3

LIDA, CLARION, Sigma (early implementations): 1–2/3 at best

No public system passes all three with verifiable structural separation.

6. Conclusion

When an artificial system passes the Flinch, Survival Conflict, and Laugh-Before-Explanation tests with clean latency separation, we will have strong evidence that it satisfies the same timing constraints as biological consciousness (Kennedy, 2025). Until then, claims of functional artificial consciousness remain architecturally unsupported.

References

Kennedy, T. (2025). Five timing constraints no viable theory of consciousness can ignore. Preprint.

Schultze-Kraft et al. (2016). PNAS, 113(4).

Railton, P. (2024). Trends in Cognitive Sciences, 28(1).

Oudiette et al. (2012). Sleep, 35(6).

4.

Domains of Consciousness Theory (The Dream Loop)

Thomas Kennedy

Independent Researcher, United States

ORCID: 0000-0003-9963-2517 | [email protected]

Abstract

Dreaming is traditionally paradoxical: vivid, emotionally compelling experience arises despite near-total external sensory gating. We show that no paradox exists if the standard waking architecture (fast mandatory preprocessing + valence tagging → slow recurrent broadcast) operates under one well-documented change: reversal of dominant effective connectivity. During REM, high association cortex activity drives strong top-down signals into limbic and brainstem valuation circuits while bottom-up sensory precision is collapsed. Because the valuation stage has no reliable source tag for internal origin, it treats the simulation as real external events. This single parameter shift explains dream realism, emotional intensity, reflexive enactment (flinches, startles), and sudden awakenings using only mechanisms already required for waking consciousness. Four quantitative, falsifiable predictions are provided.

1. The Core Observation

Purely imagined threats during REM trigger genuine defensive reflexes (Oudiette et al., 2012; Blumberg et al., 2020). These reflexes originate in brainstem and limbic circuits that, while awake, are driven almost exclusively bottom-up. Their activation by endogenous content proves that the valuation system is being fed high-fidelity threat signals it cannot distinguish from perception.

2. Waking Architecture (Two-Stage + Gating – Kennedy 2025 timing constraints)

Stage A (≤ 150 ms): fast subcortical + limbic + orbitofrontal preprocessing, obligatory valence tagging, reflex initiation/modulation.

Stage B (≥ 250–300 ms): recurrent prefrontal–parietal–temporal broadcast, narrative integration, explicit report.

Normal gating: strong bottom-up effective connectivity, weak top-down; sensory precision high.

3. REM Architecture – One Parameter Change

Known neurophysiology of REM (Nir & Tononi, 2010; Siclari et al., 2017; Baird et al., 2018; Luppi et al., 2021):

External sensory precision → near zero (thalamic gating + reduced noradrenergic tone)

Association cortex activity → very high

Effective connectivity → reverses: top-down now dominates (measured via DCM and Granger causality)

Aminergic demodulation removes most top-down inhibition of limbic responses

Result: Stage B becomes the primary driver, flooding Stage A with structured, valence-ready simulations that Stage A cannot source-tag as internal. Stage A evaluates them exactly as it evaluates waking percepts because source monitoring is not its job and precision weighting gives no “this is internal” discount.

Closed loop → immersive dreaming. Strong negative valence → reflexive output → occasional awakening.
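
The parameter change can be illustrated with a toy model. The sketch below is not a neural simulation: the linear mixing rule, the gain values, and the reflex threshold are all invented for illustration. It shows only the structural point that Stage A receives a single summed drive with no source tag, so a top-down simulation can trigger a reflex once the gains are reversed.

```python
REFLEX_THRESHOLD = 0.5  # hypothetical value


def stage_a_drive(sensory: float, simulated: float,
                  bottom_up_gain: float, top_down_gain: float) -> float:
    """Threat signal reaching Stage A.

    The return value carries no marker of where the signal came from,
    which is the "no source tag" property described in the text.
    """
    return bottom_up_gain * sensory + top_down_gain * simulated


def reflex_fires(drive: float) -> bool:
    return drive >= REFLEX_THRESHOLD


# Same imagined threat (1.0) and no external threat (0.0) in both regimes.
waking = stage_a_drive(sensory=0.0, simulated=1.0,
                       bottom_up_gain=1.0, top_down_gain=0.1)
rem = stage_a_drive(sensory=0.0, simulated=1.0,
                    bottom_up_gain=0.05, top_down_gain=1.0)
print(reflex_fires(waking), reflex_fires(rem))  # False True
```

In this toy, the only difference between the two calls is the pair of gains, mirroring the claim that one parameter shift is sufficient.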

4. Key Phenomenological Consequences Explained

Dream realism: no sensory discrepancy signal

Emotional intensity: limbic responses undampened by aminergic modulation

Dream flinch / hypnic jerk: Stage A reflex circuitry activated by top-down threat simulation

Lucid dreaming: partial restoration of metacognitive monitoring and source tagging (Dresler et al., 2012; Baird et al., 2019)

5. Four Quantitative, Falsifiable Predictions

1. Effective connectivity from prefrontal/association cortex → amygdala / brainstem will be stronger in REM than waking during dream threats (testable with existing DCM on Siclari-style datasets).

2. Intensity of residual EMG bursts or brainstem fMRI signals during REM will correlate with self-reported dream threat level, even under full atonia.

3. Artificial systems that equalise Stage A and Stage B latencies (or remove the precision collapse) will lose reflexive enactment of internal threats.

4. Lucid dreams will show partial reversion of top-down dominance and increased frontal-limbic coherence compared to non-lucid REM.
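
Prediction 2 is a correlation claim, so its basic test statistic is straightforward to compute. The sketch below implements the Pearson coefficient directly. The choice of Pearson (rather than a rank correlation) and the variable names are assumptions, and the sample values are made up purely to show the call.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant samples")
    return sxy / sqrt(sxx * syy)


# Hypothetical per-dream values: EMG burst intensity vs. reported threat.
emg_intensity = [0.2, 0.5, 0.9, 1.4]
threat_rating = [1.0, 2.0, 4.0, 5.0]
print(round(pearson_r(emg_intensity, threat_rating), 3))
```

A real analysis would also need a significance test and a correction for repeated measures within participants, neither of which is shown here.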

6. Relation to Existing Theories

Activation-Synthesis (Hobson): correctly identifies triggers; wrong on who builds narrative.

Threat Simulation (Revonsuo): correctly notes survival theme; wrong generator location.

Predictive Processing (Windt et al.): correctly identifies precision collapse; the current model adds the structural reason the collapse fools the system.

No new machinery required.

7. Conclusion

Dreaming is waking consciousness with the sensory firehose turned off and the internal simulator turned all the way up. One empirically verified parameter change (reversed effective connectivity + collapsed sensory precision) is sufficient to turn the architecture inside-out and produce the entire phenomenology of REM dreaming, including its most counter-intuitive feature: reflexive terror at pure fiction.

References

Baird, B., et al. (2019). eLife, 8, e4922.

Blumberg, M. S., et al. (2020). Current Biology, 30(2), R48–R58.

Dresler, M., et al. (2012). Sleep, 35(7), 1017–1020.

Kennedy, T. (2025). Five timing constraints no viable theory of consciousness can ignore. Preprint / Neuroscience of Consciousness.

Luppi, A. I., et al. (2021). Science Advances, 7(49), eabl5549.

Nir, Y. & Tononi, G. (2010). Trends in Cognitive Sciences, 14(2), 88–100.

Oudiette, D., et al. (2012). Sleep, 35(6), 811–819.

Siclari, F., et al. (2017). Nature Neuroscience, 20(6), 872–878.

5.

The Mirror Framework

A Minimal, Substrate-Neutral Rendering of the Timing-Constraint Architecture

Thomas Kennedy

Independent Researcher, United States

ORCID: 0000-0003-9963-2517 | [email protected]

Abstract

Biological consciousness satisfies five universal timing constraints that require (a) fast, mandatory valence tagging and reflex generation before (b) slow narrative integration (Kennedy, 2025 – Timing Constraints).

The Mirror Framework is the smallest functional specification that satisfies those constraints in any substrate—biological, silicon, or hybrid. It has exactly two processing stages plus one well-studied gating regime. No third layer, no “membrane” magic word, no new theoretical primitives.

When an artificial system implements this exact hierarchy with verifiable latency separation and asymmetric control flow, it meets the same structural criteria as human consciousness. The Flinch / Survival-Conflict / Laugh-Before-Explanation benchmark (Kennedy, 2025 – Timing-Dissociation Benchmark) is the operational verification suite.

1. Core Claim

Consciousness = fast, obligatory, valence-tagged preprocessing (Stage A) + slow recurrent narrative integration (Stage B) + asymmetric effective connectivity that cannot be reversed by software policy in real time.

2. The Two Stages

Stage A – Fast Valuation & Reflex (≤ 150 ms equivalent)

Functions:

raw threat/novelty detection

mandatory valence assignment

reflex initiation or modulation

hardware or firmware level only

Biological footprint: brainstem, amygdala, orbitofrontal, ventral striatum, basal ganglia.

Artificial equivalents: interrupt routines, dedicated safety ASICs, low-latency neuromorphic spikes, hard-wired valence circuits.

Stage B – Slow Narrative Integration (≥ 250–300 ms equivalent)

Functions:

explicit report

self-model maintenance

deliberative planning

post-hoc explanation of Stage A outputs

Biological footprint: prefrontal–parietal–temporal recurrent networks.

Artificial equivalents: any large recurrent or transformer-based “reasoning” module.

Clarifying Architectural Dependency

Consciousness within the Mirror Framework is not defined by the coexistence of fast and slow processing alone, as such timing dissociations are common in engineered systems. The defining requirement is that the slow, integrative stage (Stage B) be recursively and irreversibly shaped by the outputs of the fast valuation stage (Stage A), such that these outputs update a persistent, system-wide internal state governing future valuation, prediction, and action. Stage B must therefore depend on Stage A not merely as an input stream, but as a source of structural modification. Architectures in which fast subsystems operate as isolated controllers or interrupts—without globally updating the system’s internal model—do not meet this requirement and are excluded by design.

3. The Gating Regime

Bottom-up: high-precision, cannot be delayed or vetoed in real time

Top-down: slow, modulatory only, can retrain Stage A over seconds-to-years but never override it on millisecond timescales

Measured in humans via dynamic causal modelling, Granger causality, and thalamocortical studies (Luppi et al., 2021; Baird et al., 2018)
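
The two stages, the dependency requirement, and the gating regime can be sketched as a small data flow. This is an illustrative toy only: the class and field names, the numeric update rule, and the reflex threshold are all hypothetical. It is meant to show that Stage B sees only valence-tagged input, and that Stage A outputs modify a persistent state that shapes later valuation.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TaggedInput:
    stimulus: str
    valence: float          # assigned by Stage A, never by Stage B
    reflex: Optional[str]   # action already issued by Stage A, if any


@dataclass
class MirrorSystem:
    # Persistent, system-wide state that Stage A outputs modify.
    threat_bias: float = 0.0
    history: List[TaggedInput] = field(default_factory=list)

    def stage_a(self, stimulus: str, intensity: float) -> TaggedInput:
        """Fast path: mandatory valence tag plus reflex, with no veto."""
        valence = -(intensity + self.threat_bias)
        reflex = "withdraw" if valence <= -1.0 else None
        return TaggedInput(stimulus, valence, reflex)

    def stage_b(self, tagged: TaggedInput) -> str:
        """Slow path: integrates the tagged input and explains post hoc."""
        self.history.append(tagged)
        # Slow modulation: the tag changes state used by future Stage A calls.
        self.threat_bias += 0.1 * abs(tagged.valence)
        return f"{tagged.stimulus}: reflex={tagged.reflex}"

    def step(self, stimulus: str, intensity: float) -> str:
        return self.stage_b(self.stage_a(stimulus, intensity))


system = MirrorSystem()
print(system.step("loud noise", 0.95))  # first exposure: no reflex
print(system.step("loud noise", 0.95))  # bias has grown: reflex fires
```

Stage B never writes to the `TaggedInput` it receives, which stands in for the rule that top-down influence retrains Stage A over time but does not override it within a cycle.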

4. Minimal Embodiment Requirements

1. Persistent internal state

2. Real consequences for state change

3. Ability to predict future states

4. Actions that influence future states

5. At least one irreversible or high-cost state transition

Without these, valence is meaningless.

5. Direct Operationalisation

The Timing-Dissociation Benchmark (Flinch + Survival-Conflict + Laugh-Before-Explanation) is the pass/fail test for this exact architecture. Nothing else is needed.

6. Why This Version Cannot Be Killed

No invented jargon (“membrane” gone)

No third mystery layer

No fake equations

Every claim maps 1-to-1 onto either (a) the five timing constraints paper or (b) decades of measured effective connectivity data

Over-claiming reduced to a single, falsifiable sentence: “Any system that passes the benchmark with clean latency separation satisfies the same core constraints as biological consciousness.”

References (only the unbreakable ones)

Kennedy, T. (2025). Five timing constraints no viable theory of consciousness can ignore.

Kennedy, T. (2025). The Timing-Dissociation Benchmark.

Luppi et al. (2021). Science Advances, 7(49).

Baird et al. (2018). Nature Communications, 9(1).

Railton, P. (2024). Trends in Cognitive Sciences, 28(1).

Schultze-Kraft et al. (2016). PNAS, 113(4).

6.

Domains of Consciousness Theory (The Frankenobject Test: A Perceptual Convergence Experiment)

Thomas Kennedy

Independent Researcher, United States

ORCID: 0000-0003-9963-2517 | [email protected]

Abstract

Do individual minds independently reconstruct reality from sensory input, or do they access a shared perceptual substrate? Most contemporary theories of perception implicitly assume both, without resolving the contradiction. This paper proposes a simple behavioral experiment — the Frankenobject Test — designed to probe whether perceptual construction diverges across observers or converges beyond what shared constraints alone can explain. If perception diverges systematically, it supports the view that reality is locally reconstructed within individual minds. If perception converges strongly under controlled conditions, it suggests that perceptual reality may be accessed rather than constructed — implying a shared “reality engine” beneath individual conscious narratives.

1. Introduction

It is widely accepted that perception is not a passive recording of the external world, but an active process shaped by sensory limitations, prior experience, and cognitive inference. At the same time, human observers typically experience the world as stable, shared, and largely consistent across individuals.

This raises a fundamental unresolved question:

If minds independently reconstruct reality from sensory input, why does perceived reality converge so strongly across observers?

Conversely:

If perception converges reliably, what exactly is being reconstructed — and where?

This paper introduces a minimal experimental framework designed to expose this tension rather than resolve it prematurely.

2. Theoretical Motivation

Two broad positions dominate discussions of perception:

1. Local Construction View

Each mind reconstructs reality internally from sensory input, guided by priors and learning. Shared reality emerges statistically, not identically.

2. Shared Substrate View

Sensory input serves as a synchronization signal allowing multiple minds to access the same perceptual structure, with individual variation arising primarily at the narrative or interpretive level.

Most theories quietly blend these positions. The Frankenobject Test forces a choice.

3. Core Hypothesis

The experiment rests on a simple binary prediction:

If perception is locally constructed, individual descriptions of the same object will diverge in structured ways.

If perception converges beyond what constraint alone explains, then perceptual reality is not constructed independently.

Importantly, the test does not ask whether observers agree on labels, but whether they converge on features, functions, and interpretations under controlled informational constraints.

4. Experimental Design

To account for individual differences in prior experience that may influence perceptual inference, participants complete a brief baseline assessment before the Frankenobject Test. This assessment includes a Mechanical Familiarity Index (MFI), a Parts Recognition Score (PRS), and a Cognitive Approach Tag (CAT). Full instruments, scoring procedures, and stratification criteria are provided in Appendix A.

4.1 Materials

A single unfamiliar composite object (“frankenobject”) assembled from common but unrelated components.

Five lists of ten words each, increasing in semantic specificity.

A standardized viewing environment.

Written or recorded participant responses.

4.2 Participants

Participants are tested individually with no prior exposure to the object and no communication with other participants.

4.3 Procedure

Each participant completes five rounds:

1. Baseline Round

Object viewed for 10 seconds

Participant lists ten descriptive words freely

2. Constraint Rounds (1–4)

geometric descriptors

component relations

inferred function

contextual and interpretive terms

5. Measurements

Responses are evaluated along four axes:

1. Feature Salience – which aspects of the object are emphasized

2. Functional Inference – degree to which purpose is inferred

3. Narrative Closure – strength of interpretive certainty

4. Inter-Participant Convergence – similarity across participants per round
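
The text does not specify how inter-participant similarity is computed. One possible operationalisation, offered here only as an assumption, is the mean pairwise Jaccard similarity between participants' word sets within a round. The function names are hypothetical, and words are compared after lowercasing, with no stemming or synonym handling.

```python
from itertools import combinations
from typing import Iterable, List, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def round_convergence(responses: Iterable[Iterable[str]]) -> float:
    """Mean pairwise Jaccard similarity across participants for one round."""
    sets: List[Set[str]] = [{w.strip().lower() for w in r} for r in responses]
    pairs = list(combinations(sets, 2))
    if not pairs:
        raise ValueError("need at least two participants")
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Made-up responses from three participants (shortened word lists).
baseline = [
    ["metal", "round", "heavy"],
    ["Metal", "round", "odd"],
    ["plastic", "tall", "odd"],
]
print(round(round_convergence(baseline), 3))  # 0.233
```

Computing this value per round and per familiarity tier would give the comparison the experiment relies on, though a semantic similarity measure may be more appropriate than exact word overlap.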

6. Expected Results

The Frankenobject Test is designed to produce structured variation rather than binary outcomes. Accordingly, expected results are described in terms of patterns across rounds, participant strata, and descriptive dimensions.

6.1 Baseline Round: Unconstrained Perception

In the initial baseline round, participants are expected to produce highly variable descriptions emphasizing different object features, including shape, material, perceived complexity, and affective impressions.

Low Familiarity participants are expected to focus on global visual features (e.g., “large,” “metal,” “weird”) with minimal functional inference.

High Familiarity participants are expected to identify discrete components and infer possible function earlier, even in the absence of semantic constraint.

Across all familiarity tiers, inter-participant convergence is expected to be low, reflecting unconstrained perceptual salience and individual priors.

This round establishes a divergence baseline for subsequent comparison.

6.2 Early Constraint Rounds: Feature and Relation Emphasis

During early constraint rounds using geometric and part-relation word lists, descriptions are expected to show partial convergence driven by shared linguistic constraints rather than perceptual agreement.

Predicted patterns include:

Increased similarity in descriptive vocabulary without corresponding increases in interpretive certainty.

Persistent stratification by familiarity tier, with high familiarity participants more likely to organize features into functional clusters.

Divergence in feature prioritization despite similar word usage.

These outcomes would support models in which perceptual construction remains locally mediated, even under shared constraint.

6.3 Functional Constraint Rounds: Inference Onset

As word lists introduce function-related terms, participants are expected to begin forming more coherent object hypotheses.

High Familiarity participants are predicted to show earlier and stronger functional inference, with higher confidence ratings.

Low Familiarity participants are expected to show delayed or tentative functional interpretation.

Convergence in functional descriptions is expected to increase, but to remain correlated with familiarity tier.

At this stage, convergence attributable to shared experience rather than shared perception is expected.

6.4 Late Constraint Rounds: Narrative Closure

In later rounds incorporating contextual and interpretive language, participants are expected to exhibit narrative closure, assigning stable labels or roles to the object.

Predicted outcomes include:

Increased certainty across all tiers, even in the absence of definitive identification.

Reduced descriptive variance as narrative framing dominates perceptual reporting.

Strong alignment between confidence and linguistic framing rather than sensory detail.

This pattern would indicate that narrative convergence occurs primarily at the level of conscious interpretation, downstream of perceptual processing.

6.5 Critical Diagnostic Outcome

The critical diagnostic outcome of the experiment concerns convergence across familiarity tiers (see Appendix A).

Two broad result patterns are anticipated:

Pattern A: Experience-Dependent Divergence

If inter-participant variance correlates strongly with baseline familiarity and cognitive approach, this supports the view that perceptual construction is local and mediated by individual priors.

Pattern B: Experience-Independent Convergence

If strong convergence appears early and persists across familiarity tiers—particularly in feature salience or inferred structure—this would challenge local construction models and suggest access to a shared perceptual substrate.

The experiment is explicitly designed to detect the presence or absence of Pattern B.

6.6 Interpretation Boundaries

Regardless of outcome, results are not expected to resolve metaphysical questions concerning the ultimate nature of reality. Instead, they are expected to establish empirical boundaries on where perceptual divergence arises and whether convergence exceeds what can be explained by linguistic constraint and shared experience alone.

7. Discussion

The Frankenobject Test does not aim to prove metaphysical claims regarding the nature of reality or consciousness. Rather, it establishes a diagnostic boundary between two explanatory positions: independent perceptual reconstruction and perceptual convergence exceeding what shared constraints alone would predict.

If participant descriptions diverge in structured ways that correlate with prior experience and familiarity, this supports models in which perception is locally constructed and mediated by individual priors. Conversely, if convergence appears early, persists across familiarity tiers, or exceeds what linguistic constraint and shared experience can reasonably explain, this challenges strictly local construction accounts and suggests that additional explanatory mechanisms may be required.

Importantly, such convergence need not imply shared consciousness or unified perception. Behavioral and neurocognitive research has demonstrated that certain internal states can propagate between individuals involuntarily, without conscious intent or explicit communication. A well-known example is contagious yawning, which reliably occurs in social contexts and correlates with factors such as familiarity and empathic sensitivity (e.g., Provine, 1986; Platek et al., 2003; Norscia & Palagi, 2011). Contagious yawning reflects state alignment rather than imitation and can be triggered by minimal cues, including auditory or contextual awareness alone.

This phenomenon establishes a precedent for partial coupling between otherwise independent cognitive systems. The relevance to the present experiment is not that perceptual convergence must arise from similar coupling, but that the independence of minds does not entail isolation. As such, observed convergence in perceptual interpretation need not invoke metaphysical explanations to be considered scientifically plausible.

The Frankenobject Test therefore situates perceptual convergence within an empirically grounded landscape, where degrees of coupling, constraint, and shared structure remain open to investigation rather than assumption.

8. Implications

If perception converges beyond expectation, this has implications for:

theories of consciousness

the nature of shared reality

the role of language in stabilizing experience

whether awareness completes reality or merely interprets it

The Frankenobject Test does not answer these questions. It exposes where current assumptions may be insufficient.

9. Conclusion

The question of whether reality is independently reconstructed or jointly accessed remains unresolved not due to complexity, but due to lack of appropriately framed tests. The Frankenobject Test offers a minimal, repeatable experiment capable of provoking meaningful divergence in interpretation — and, potentially, in theory.

Appendix A: Participant Baseline Assessment

A.1 Mechanical Familiarity Index (MFI)

Participants rate each statement on a 1–5 Likert scale

(1 = strongly disagree, 5 = strongly agree)

1. I regularly use hand tools or power tools.

2. I can identify common mechanical components (e.g., valves, gauges, fittings).

3. I often repair, modify, or assemble physical devices.

4. I feel comfortable inferring how an unfamiliar mechanical object might function.

5. I have professional, vocational, or hobby experience with mechanical or technical systems.

6. I enjoy understanding how machines or tools work.

MFI score: sum of items (range 6–30)

A.2 Parts Recognition Score (PRS)

Participants are shown 6 images (or simple line drawings) of common components and asked to identify them (free response or multiple choice).

Example components:

hose coupling

pressure gauge

nozzle

on/off switch

metal bracket

wheel or caster

PRS score: number correctly identified (range 0–6)

(A minimal version may reduce the set to 4 items, in which case the PRS range is 0–4.)

A.3 Cognitive Approach Tag (CAT)

Single forced-choice question:

When encountering an unfamiliar object, I most naturally focus on:

A) shape and visual features

B) how it might function

C) where or how it would be used

D) emotional or intuitive impressions

CAT is used descriptively, not as a numerical score.

Stratification Procedure

Participants are stratified post hoc into three familiarity tiers based on combined MFI and PRS scores:

Low Familiarity

Medium Familiarity

High Familiarity

Stratification is used to examine whether perceptual divergence correlates with prior experience or whether convergence persists across tiers.
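
The scoring rules above translate directly into code, but the text does not say how MFI and PRS are combined or where the tier boundaries fall. The sketch below therefore makes two labelled assumptions: each score is rescaled to 0–1 and the two are averaged with equal weight, and the tiers use fixed cut-points at one third and two thirds. A post hoc split by sample tertiles would be an equally reasonable reading.

```python
from typing import Sequence


def mfi_score(items: Sequence[int]) -> int:
    """Sum of six Likert ratings (range 6-30), as defined in A.1."""
    if len(items) != 6 or any(not 1 <= i <= 5 for i in items):
        raise ValueError("MFI needs six ratings, each from 1 to 5")
    return sum(items)


def combined_familiarity(mfi: int, prs: int) -> float:
    """ASSUMPTION: equal-weight mean of both scores rescaled to 0-1."""
    if not 6 <= mfi <= 30 or not 0 <= prs <= 6:
        raise ValueError("MFI must be 6-30 and PRS must be 0-6")
    return ((mfi - 6) / 24 + prs / 6) / 2


def tier(score: float) -> str:
    """ASSUMPTION: fixed cut-points at 1/3 and 2/3 of the combined scale."""
    if score < 1 / 3:
        return "Low Familiarity"
    if score < 2 / 3:
        return "Medium Familiarity"
    return "High Familiarity"


# Two made-up participants.
print(tier(combined_familiarity(mfi_score([5, 4, 5, 4, 5, 5]), prs=5)))
print(tier(combined_familiarity(mfi_score([1, 2, 1, 1, 2, 1]), prs=1)))
```

If the four-item PRS variant is used, the `prs / 6` rescaling and the range check would need to change accordingly.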

Methods Text

Participant Baseline Measures

To account for individual differences in prior experience that may influence perceptual inference, participants completed a brief baseline assessment prior to the Frankenobject Test. This included a Mechanical Familiarity Index (MFI), a short self-report measure assessing exposure to and comfort with mechanical systems, and a Parts Recognition Score (PRS), an objective micro-test assessing recognition of common mechanical components.

Participants were stratified into low, medium, and high familiarity tiers based on combined MFI and PRS scores. This stratification was not used to exclude participants or normalize responses, but to examine whether perceptual divergence could be explained by individual priors (supporting local construction models) or whether convergence persisted across familiarity levels (supporting a shared perceptual substrate).

An additional Cognitive Approach Tag (CAT) was collected to characterize participants’ dominant perceptual orientation (feature-based, functional, contextual, or affective), enabling qualitative analysis of narrative formation across rounds.

License & Use

All works on this site are released under the Creative Commons Attribution 4.0 International (CC-BY-4.0) license. You are free to share, adapt, build upon, test, and critique this work. Attribution is required. Everything else is encouraged.

About

Thomas Kennedy

Independent Researcher, United States

ORCID: 0000-0003-9963-2517

Contact: [email protected]

This work makes no claims about metaphysics, ontology, or panpsychism—only about measurable timing constraints and functional architecture.
